RULA Assessment - Depth Sensors vs. Machine Vision Algorithm

Collaborators' affiliations:

- Department of Sciences and Methods for Engineering, University of Modena and Reggio Emilia, Reggio Emilia, RE 42122, Italy

- CerebrumEdge Technologies, Bangalore, India

We compared a commercial machine vision (MV) algorithm based on an RGB camera (ErgoEdge) with an application based on the Microsoft Azure Kinect depth camera (AzKRULA) for Rapid Upper Limb Assessment (RULA) evaluation. Fifteen static postures were evaluated with both systems, and the results were compared with the scores given by an ergonomics expert. The three evaluations showed substantial agreement.

AzKRULA

To calculate the RULA score for each frame, we used the Azure Kinect Body Tracking SDK (Microsoft, 2021a), a CNN-based algorithm that retrieves 32 joints in 3D space, as reported in Figure 1.
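As a rough illustration of how 3D joint positions can feed a RULA sub-score, the sketch below computes the elbow flexion angle from three joints and maps it to the lower-arm base score of the standard RULA worksheet (1 for 60° to 100° of flexion, 2 otherwise). It is a minimal sketch under stated assumptions, not the AzKRULA implementation: the function names and the joint coordinates are hypothetical, and the worksheet adjustments (e.g. arm working across the midline) are omitted.

```python
import math


def joint_angle_deg(a, b, c):
    """Included angle at joint b (degrees) between segments b->a and b->c."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm_u = math.sqrt(sum(ui * ui for ui in u))
    norm_v = math.sqrt(sum(vi * vi for vi in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("two joints coincide; the angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point round-off.
    cos_t = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_t))


def rula_lower_arm_base_score(flexion_deg):
    """Lower-arm base score from the standard RULA worksheet (no adjustments)."""
    return 1 if 60.0 <= flexion_deg <= 100.0 else 2


# Hypothetical joint positions in millimetres (illustrative values only).
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, 300.0, 0.0)
wrist = (0.0, 300.0, 250.0)

# Flexion is 0 degrees for a straight arm, so subtract the included angle from 180.
flexion = 180.0 - joint_angle_deg(shoulder, elbow, wrist)
score = rula_lower_arm_base_score(flexion)
print(f"elbow flexion = {flexion:.1f} deg, lower-arm base score = {score}")
```

The same angle helper could be reused for the other RULA body segments, each with its own worksheet thresholds.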

ErgoEdge

ErgoEdge is a commercial solution designed for ease of use and comprehensive assessment at worksites. A normal smartphone video is processed through deep learning models to estimate the RULA score. A demo of the software can be found at (CerebrumEdge, 2021).

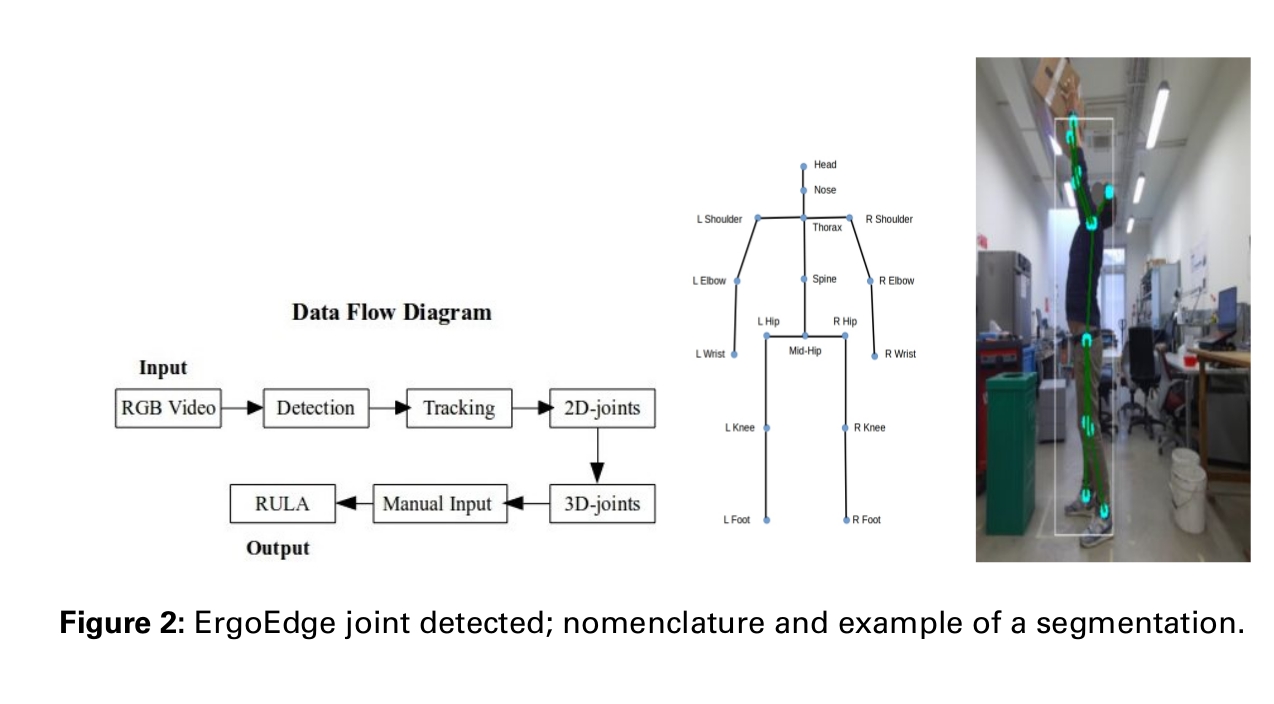

Figure 2 shows the data flow diagram, the joints detected by ErgoEdge and their nomenclature as well as an example of a segmentation performed on a well-known static posture.

Conclusions

- The MV algorithm based on simple RGB data (i.e., ErgoEdge) can be used for RULA evaluation, as its results were in substantial agreement with those of both the expert and AzKRULA. However, ErgoEdge has limitations in calculating joint angles from frontal postures, which suggests that side views of the principal body parts should be used when working with ErgoEdge.
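One plausible geometric reason for the frontal-view limitation is foreshortening: a flexion that happens in the sagittal plane largely disappears when the limb is projected onto a frontal image plane. The sketch below illustrates this with an idealized orthographic projection. It is an assumption-laden illustration, not a description of how ErgoEdge works internally; the axis convention (x lateral, y vertical, z front-to-back), the function names, and the coordinates are all hypothetical.

```python
import math


def angle_deg(u, v):
    """Angle in degrees between two vectors of equal dimension."""
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm = math.sqrt(sum(ui * ui for ui in u)) * math.sqrt(sum(vi * vi for vi in v))
    if norm == 0:
        raise ValueError("a segment has zero length in this view")
    # Clamp to [-1, 1] to guard against floating-point round-off.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def flexion_deg(shoulder, elbow, wrist, keep_axes):
    """Elbow flexion seen after keeping only the listed coordinate axes."""
    upper = [elbow[i] - shoulder[i] for i in keep_axes]
    fore = [wrist[i] - elbow[i] for i in keep_axes]
    return angle_deg(upper, fore)


# Hypothetical arm: upper arm hanging down, forearm flexed 60 degrees
# in the sagittal (y-z) plane. Units are millimetres.
f = math.radians(60.0)
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, -300.0, 0.0)
wrist = (0.0, -300.0 - 250.0 * math.cos(f), 250.0 * math.sin(f))

true_3d = flexion_deg(shoulder, elbow, wrist, keep_axes=(0, 1, 2))
side_view = flexion_deg(shoulder, elbow, wrist, keep_axes=(1, 2))   # drops x
front_view = flexion_deg(shoulder, elbow, wrist, keep_axes=(0, 1))  # drops z

print(f"3D: {true_3d:.1f}, side view: {side_view:.1f}, front view: {front_view:.1f}")
```

In this idealized case the side view preserves the 60° flexion, while in the frontal view the forearm appears collinear with the upper arm, so the flexion is not recoverable from the projected 2D keypoints alone.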

Full paper is available here.

More information about ErgoEdge: ErgoEdge